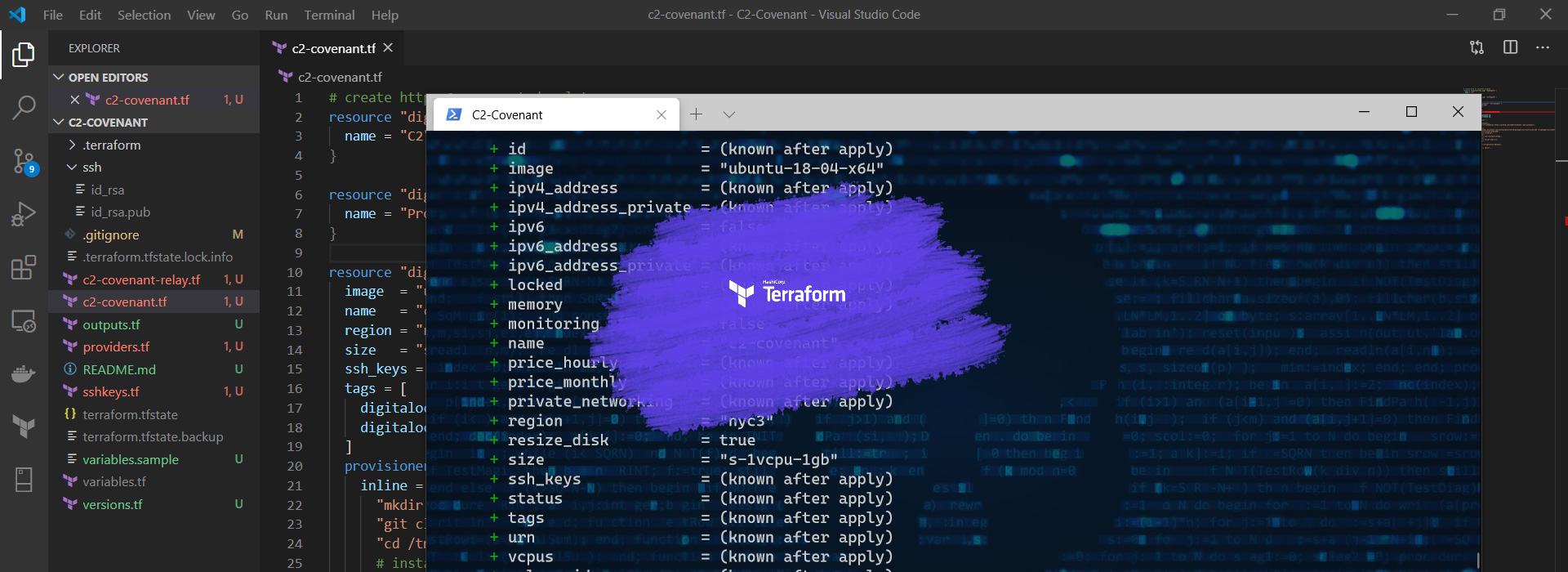

Terraform - Running from a Docker Container

The official terraform container has its entry point set to the terraform binary. This means that every time you invoke the terraform command, a container is started and then stopped once the command finishes.

However, I do not want to connect to the Docker host and use the docker run ... command every time a resource is deployed. Instead, the container should stay up all the time, allowing us to connect and manage resources when needed.

My terraform container will run using the macvlan network driver and will behave as if it were connected directly to the network. This article describes in more detail how the network is structured.

Although it is not recommended, my use case requires that we SSH into the container rather than the Docker host, which provides a degree of isolation from the host.

1) Creating the Terraform Docker Container

Let's create the docker-compose file to run our custom image.

    version: "3"

    services:
      terraform:
        container_name: terraform
        image: terraform:Dockerfile
        ports:
          - "22:22/tcp"
        environment:
          TZ: 'Europe/London'
        hostname: terraform
        entrypoint:
          - /usr/bin/tail
        command: -f /dev/null
        dns:
          - 192.168.65.1
        networks:
          macvlan65:
            ipv4_address: 192.168.65.30
        restart: always

    networks:
      macvlan65:
        external: true

The change worth mentioning here is the entrypoint, which has been altered from terraform to tail. When the command property is present in a docker-compose file, its contents are passed as arguments to the entrypoint, and we are using tail -f /dev/null to keep the container up.
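The way the entrypoint and command combine can be illustrated with a small shell sketch. It only prints strings and does not start anything; the `docker run` line is a rough equivalent that ignores the network, DNS and port settings, and the function names are made up for this illustration.

```shell
#!/bin/sh
# Sketch: show what the Compose entrypoint/command pair amounts to.
# Nothing is executed against Docker; the commands are only printed.

# Values taken from the docker-compose file above.
IMAGE="terraform:Dockerfile"
ENTRYPOINT="/usr/bin/tail"
COMMAND="-f /dev/null"

# The command is appended to the entrypoint, so the process started
# inside the container is: /usr/bin/tail -f /dev/null
container_process() {
    printf '%s %s\n' "$ENTRYPOINT" "$COMMAND"
}

# With plain docker, --entrypoint overrides the image entry point and
# everything after the image name is passed to it as arguments.
equivalent_docker_run() {
    printf 'docker run -d --entrypoint %s %s %s\n' "$ENTRYPOINT" "$IMAGE" "$COMMAND"
}

container_process
equivalent_docker_run
```

Because tail -f /dev/null never exits, the container's main process keeps running and the container stays up.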

Below we have a basic Dockerfile to build our image.

    # Use the official terraform image as a base.
    # It is recommended to specify a version and not use latest for production.
    ARG version=1.1.8
    FROM hashicorp/terraform:$version

    # Install custom software.

If we run the commands below the container will start and stay up.

    docker build --no-cache --pull -t "terraform:Dockerfile" .
    docker-compose up -d

2) Adding openssh Server to the Container

Add the lines below to our Dockerfile and rebuild the image.

    # ...

    # Install openssh & openrc to manage services
    RUN apk add --update --no-cache openrc openssh \
        && rc-status \
        # openrc requires this file when not initialized during boot.
        && touch /run/openrc/softlevel \
        # Starting the SSH server
        && rc-service sshd start

- Creating a new user

We now need to create a new user to SSH in and use terraform. The user creation has a few caveats.

First, we need to create a user on the host machine and note down its user and group ID. You might be asking, why? We will be mounting volumes from the host into our container, and these volumes will persist the SSH keys and configuration.

The permissions set on content in the volume are identical from the perspective of the host and the container; what matters here are only the numeric user and group IDs. Make sure the container user's user ID and group ID match those of the host user.
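One way to avoid copying the IDs by hand is to read them with `id` and pass them as build arguments. The sketch below assumes the Dockerfile declares `ARG PUID` and `ARG PGID`, as it does later in this article; `build_args_for_user` is a hypothetical helper name, not part of the original setup.

```shell
#!/bin/sh
# Sketch: derive PUID/PGID build arguments from a host user.
# build_args_for_user is a hypothetical helper, not part of the original setup.

build_args_for_user() {
    # Optional user name; when omitted, the current user is used.
    user="${1:-}"
    # id -u / id -g print the numeric user ID and primary group ID.
    uid="$(id -u ${user:+"$user"})" || return 1
    gid="$(id -g ${user:+"$user"})" || return 1
    printf '%s\n' "--build-arg PUID=$uid --build-arg PGID=$gid"
}

# Example: print the arguments for the user running this script.
build_args_for_user
```

The output could then be spliced into the build, for example `docker build $(build_args_for_user terraform) -t "terraform:Dockerfile" .`, assuming the host user is called terraform.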

    # ...

    # Both have to match the host's, otherwise permission issues will happen.
    ARG PUID=973
    ARG PGID=973

    # Creating a new user for terraform and SSH.
    # BusyBox adduser takes the group with -G (-g sets the GECOS field),
    # so the group is created first with the wanted GID.
    RUN addgroup -g $PGID $USERNAME \
        && adduser -h /home/$USERNAME -s /bin/sh -u $PUID -G $USERNAME -D $USERNAME

    # Setting a temp password to the new user
    RUN echo -n terraform:$TEMP_PASSWORD | chpasswd

    # Define the user commands will be run as
    USER $USERNAME

    # Create the SSH key folder
    RUN mkdir /home/$USERNAME/.ssh/ \
        && chmod 0700 /home/$USERNAME/.ssh/

Adjust the permissions of the mapped folder and generate the key pair.

    ssh-keygen -b 4096 -t rsa -C "HV1-VM104-DOCKER-TERRAFORM" -N "" -v

Create a file named authorized_keys in the .ssh folder and paste your public key into it.
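The authorized_keys step can also be scripted on the host. This is a minimal sketch: `install_public_key` is a hypothetical helper, and the 700/600 modes are the ones sshd conventionally expects for the .ssh folder and the authorized_keys file.

```shell
#!/bin/sh
# Sketch: install a public key into an authorized_keys file.
# install_public_key is a hypothetical helper, not part of the original setup.

install_public_key() {
    pubkey_file="$1"   # path to the public key file
    ssh_dir="$2"       # target .ssh directory on the host

    mkdir -p "$ssh_dir" || return 1
    chmod 700 "$ssh_dir" || return 1
    # Append rather than overwrite, so existing keys are preserved.
    cat "$pubkey_file" >> "$ssh_dir/authorized_keys" || return 1
    chmod 600 "$ssh_dir/authorized_keys"
}
```

For the layout used in this article, a call might look like `install_public_key ./id_rsa.pub ./home/terraform/.ssh`, where the key file name is an assumption.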

- Setting terraform and root password

Create a .sh file and add the following:

    #!/bin/sh
    echo 'terraform:YOUR_PASSWORD' | chpasswd
    echo 'root:YOUR_PASSWORD' | chpasswd

We need to copy the file to our container, execute it, and make sure to delete it afterwards.

    # ...

    # Script to set root and terraform passwords.
    # echo -n USER:PASSWORD | chpasswd
    COPY ./02.export-passwords.sh /
    RUN /bin/sh /02.export-passwords.sh

    # Delete the file with the passwords
    RUN rm /02.export-passwords.sh

    # ...

- Add terraform to the sudoers

We need to add our terraform user to the sudoers by adding the lines below to our Dockerfile.

    # ...

    # Add terraform to the sudoers file.
    RUN touch /etc/sudoers.d/terraform \
        && echo "terraform ALL=(ALL:ALL) ALL" > /etc/sudoers.d/terraform

    # ...

3) Creating the Persistent Volumes

Add the following volume lines to your docker-compose file:

    ...
        volumes:
          - ./home/terraform:/home/terraform:rw
          - ./home/terraform/.ssh:/home/terraform/.ssh:ro
          - ./etc/ssh:/etc/ssh
    ...

We will use /etc/ssh to store our openssh-server configuration and the /home folder to manage our SSH authorized keys, hold our terraform projects, and create the .profile file that exports our environment variables.
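The final Dockerfile refers to an entrypoint.sh whose contents are not shown in this article. As a rough idea of what such a script could do, the sketch below writes an assumed version that creates any missing host keys in the mounted /etc/ssh and runs sshd in the foreground. It assumes an sshd_config already exists in the mounted folder; `write_entrypoint` is a hypothetical helper, and the generated script is a guess, not the author's file.

```shell
#!/bin/sh
# Sketch: write a minimal entrypoint script for the container.
# The article's real entrypoint.sh is not shown; this is an assumed version.

write_entrypoint() {
    target="$1"
    cat > "$target" <<'EOF'
#!/bin/sh
# Create any missing host keys in /etc/ssh (existing keys are left alone).
ssh-keygen -A
# Run sshd in the foreground so it becomes the container's main process.
exec /usr/sbin/sshd -D -e
EOF
    chmod +x "$target"
}
```

Running `write_entrypoint ./entrypoint.sh` next to the Dockerfile would produce the file. Keeping sshd in the foreground means the container stays up without the tail workaround, since sshd itself is the long-running process.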

The final Dockerfile and docker-compose.yml can be checked below.

Dockerfile:

    # Use the official terraform image as a base.
    # It is recommended to specify a version and not use latest for production.
    ARG VERSION=1.1.8
    FROM hashicorp/terraform:$VERSION

    # User
    ARG USERNAME="terraform"

    # Users passwords
    #ENV ROOT_PASSWORD=''
    #ENV USER_PASSWORD=''

    # User ID & Group ID
    # Both have to match the host's, otherwise permission issues will happen.
    ARG PUID=973
    ARG PGID=973

    LABEL maintainer="Tiago Stoco"

    # Install openssh & openrc to manage services
    RUN apk add --update --no-cache openrc openssh sudo \
        && rc-status \
        # openrc requires this file when not initialized during boot.
        && touch /run/openrc/softlevel \
        # Starting the SSH server
        && rc-service sshd -v restart \
        && rc-status

    # Creating a new user for terraform and SSH.
    # BusyBox adduser takes the group with -G (-g sets the GECOS field),
    # so the group is created first with the wanted GID.
    RUN addgroup -g $PGID $USERNAME \
        && adduser -h /home/$USERNAME -s /bin/sh -u $PUID -G $USERNAME -D $USERNAME

    # Define the user commands will be run as
    USER $USERNAME

    # Create the SSH key folder
    RUN mkdir /home/$USERNAME/.ssh/ \
        && chmod 0700 /home/$USERNAME/.ssh/

    USER root

    # Enable root user.
    RUN passwd -u root

    # Script to set root and terraform passwords.
    # echo -n USER:PASSWORD | chpasswd
    COPY ./02.export-passwords.sh /
    RUN /bin/sh /02.export-passwords.sh

    # Delete the file with the passwords
    RUN rm /02.export-passwords.sh

    # Add terraform to the sudoers file.
    RUN touch /etc/sudoers.d/terraform \
        && echo "terraform ALL=(ALL:ALL) ALL" > /etc/sudoers.d/terraform

    # Script to restart the sshd service due to crash during build
    # and set user passwords
    ENTRYPOINT ["/entrypoint.sh"]

    # Open SSH port.
    EXPOSE 22

    # Copy the entrypoint script to the container's root.
    COPY ./entrypoint.sh /

docker-compose.yml:

    version: "3"

    services:
      terraform:
        container_name: terraform
        image: terraform:Dockerfile
        ports:
          - "22:22/tcp"
        environment:
          TZ: 'Europe/London'
          USER_NAME: terraform
        volumes:
          - ./home/terraform:/home/terraform:rw
          - ./home/terraform/.ssh:/home/terraform/.ssh:ro
          - ./etc/ssh:/etc/ssh
        hostname: terraform
        dns:
          - 192.168.65.1
        networks:
          macvlan65:
            ipv4_address: 192.168.65.30
        restart: always

    networks:
      macvlan65:
        external: true

The file structure of the project looks like:

    .
    ├── 01.destroy-terraform-container.sh
    ├── 02.export-passwords.sh
    ├── docker-compose.yml
    ├── Dockerfile
    ├── entrypoint.sh
    ├── etc
    │   └── ssh
    │       ├── moduli
    │       ├── ssh_config
    │       ├── sshd_config
    │       ├── ssh_host_dsa_key
    │       ├── ssh_host_dsa_key.pub
    │       ├── ssh_host_ecdsa_key
    │       ├── ssh_host_ecdsa_key.pub
    │       ├── ssh_host_ed25519_key
    │       ├── ssh_host_ed25519_key.pub
    │       ├── ssh_host_rsa_key
    │       └── ssh_host_rsa_key.pub
    ├── .gitignore
    └── home
        └── terraform
            ├── .ash_history
            ├── .profile
            ├── .ssh
            │   ├── authorized_keys
            │   └── public.key
            ├── .terraform.d
            │   ├── checkpoint_cache
            │   └── checkpoint_signature
            └── tucana
                └── hv1
                    └── proxmox
                        ├── connection-test.tfplan
                        ├── main.tf
                        ├── terraform.tfstate
                        └── tf-plugin-proxmox.log

    83 directories, 116 files

Conclusion

With the steps above, we have a container with persistent storage to store our projects and connect to our resources.

Stay tuned: in the next article we will learn how to create a proxy for our terraform container that will make debugging easier when we start to create our Infrastructure as Code.

Resources

https://www.cyberciti.biz/faq/how-to-install-openssh-server-on-alpine-linux-including-docker/
